BotaniMate: Affective Interaction with Plant’s Data in MR

Project Date: 2024 - 2025

이다영, 주진호

Tools: Meta Quest 3, NodeMCU

Showcase: 2025 ISMAR Demo

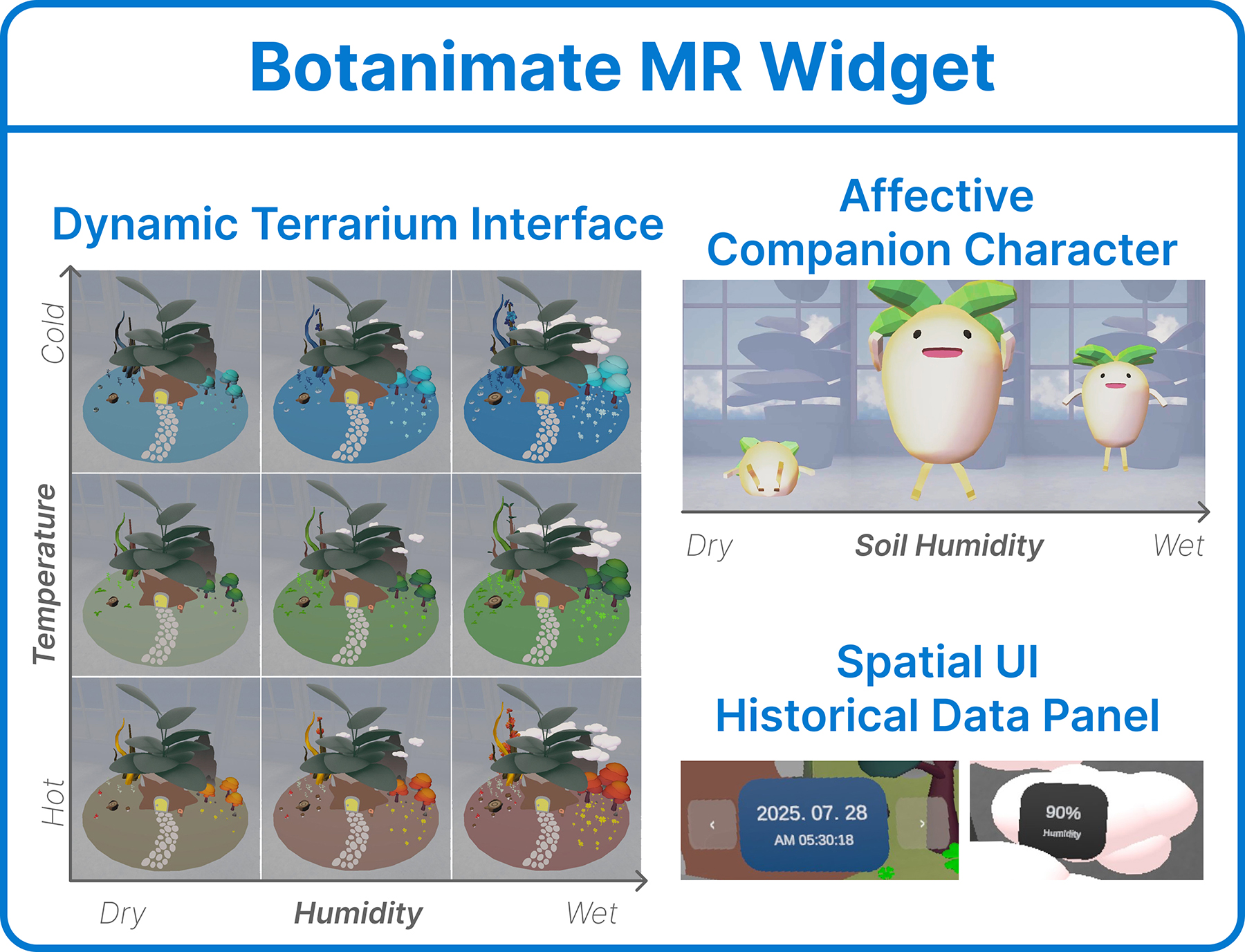

BotaniMate is a mixed reality (MR) widget that visualizes real-time IoT plant data through an expressive virtual character and a dynamic terrarium interface. By translating temperature and soil moisture readings into environmental changes within the terrarium and corresponding character behaviors, the system fosters an emotional connection with the plant and an intuitive understanding of its monitoring data and care needs. Users interact using natural hand gestures and navigate historical data through spatial UI elements. Integrating IoT sensing, cloud storage, and Unity-based MR visualization on Meta Quest 3, BotaniMate transforms abstract sensor data into playful, affective experiences that enhance empathy, memory, and engagement in everyday plant care.

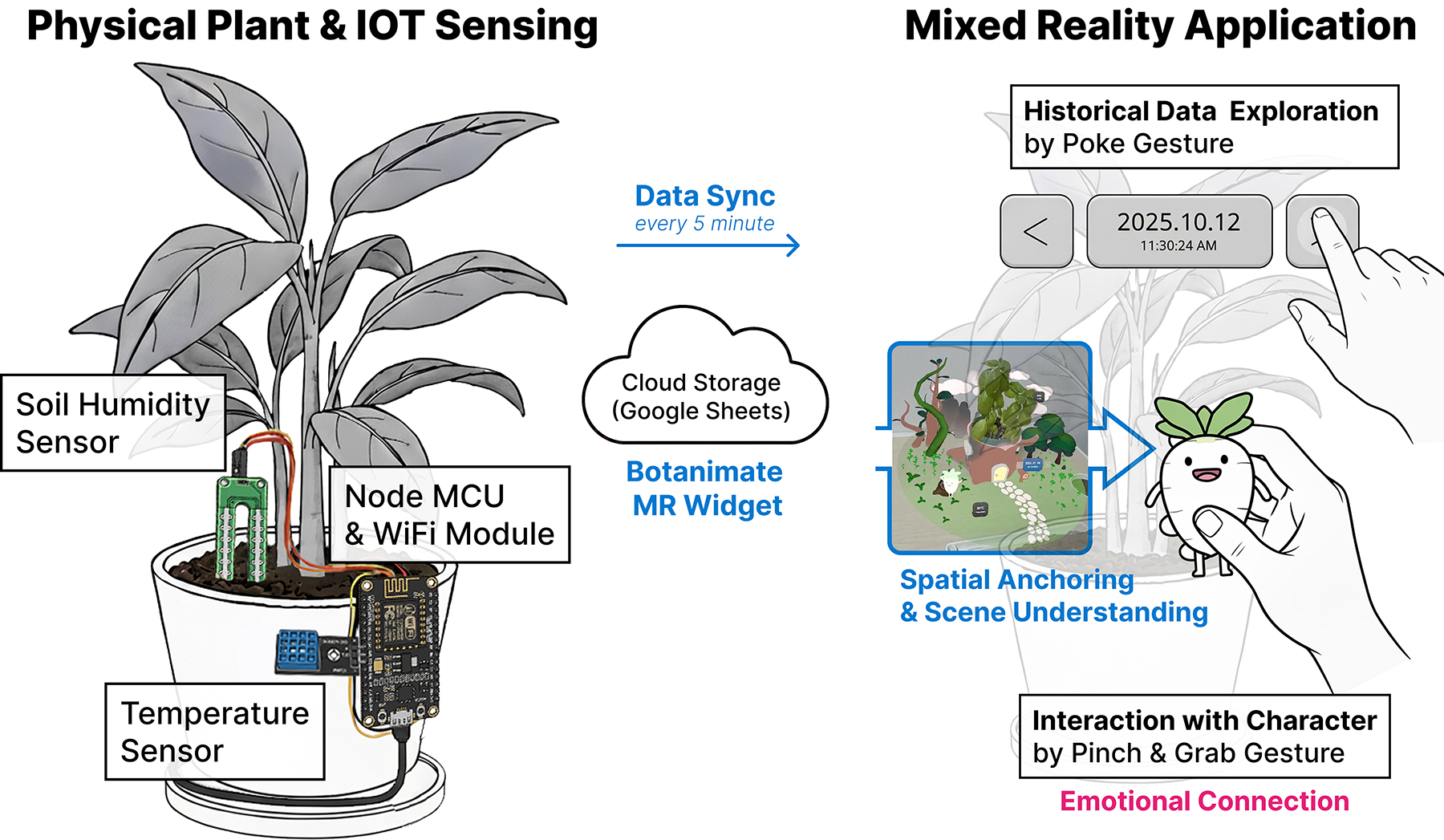

The IoT system continuously monitors soil humidity and temperature and synchronizes the readings to cloud storage every five minutes; the MR spatial widget then reflects them. A virtual MR terrarium is overlaid above the physical plant’s location, which the user registers in advance, so that the virtual and real-world environments stay spatially aligned. Environmental conditions are visualized through ambient spatial cues. Temperature is expressed through color gradients in the terrarium environment, shifting between warm and cool tones. Humidity is reflected through changes in atmospheric density, vegetation growth, and cloud volume.
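
The mapping from readings to ambient cues described above can be sketched as a pair of normalizations followed by a color blend. This is only an illustration, not the project's Unity implementation: the calibration ranges, the two endpoint colors, and the names `normalize`, `TerrariumCues`, and `cues_for` are all assumptions, since the source gives no numeric bounds.

```python
from dataclasses import dataclass

# Hypothetical calibration ranges; the source does not state numeric bounds.
TEMP_RANGE_C = (10.0, 35.0)
HUMIDITY_RANGE_PCT = (0.0, 100.0)

# Hypothetical endpoint colors for the cool-to-warm gradient (RGB in 0..1).
COOL_RGB = (0.35, 0.55, 1.00)
WARM_RGB = (1.00, 0.55, 0.25)


def normalize(value, low, high):
    """Clamp value into [low, high] and rescale it to [0, 1]."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return min(max((value - low) / (high - low), 0.0), 1.0)


def lerp(a, b, t):
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


@dataclass(frozen=True)
class TerrariumCues:
    tint_rgb: tuple       # ambient tint, cool for low and warm for high temperature
    fog_density: float    # 0 = clear air, 1 = densest atmosphere
    cloud_volume: float   # 0 = no clouds, 1 = fullest clouds


def cues_for(temp_c, humidity_pct):
    """Translate one sensor reading into normalized visual parameters."""
    t = normalize(temp_c, *TEMP_RANGE_C)
    h = normalize(humidity_pct, *HUMIDITY_RANGE_PCT)
    tint = tuple(lerp(c, w, t) for c, w in zip(COOL_RGB, WARM_RGB))
    # Assumption: fog and clouds scale linearly with the same humidity value.
    return TerrariumCues(tint_rgb=tint, fog_density=h, cloud_volume=h)
```

For example, `cues_for(22.5, 50.0)` yields a tint halfway between the two endpoint colors and mid-level fog and cloud values. Readings outside the assumed ranges are clamped rather than extrapolated.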

At the center of the interface is an interactive companion character that responds to changes in the plant’s condition through expressive behaviors: when humidity is high, it jumps energetically; when moderate, it runs and hops lightly; and when low, it lies down and exhibits tantrum-like gestures to signal distress. These behaviors let users emotionally interpret plant conditions at a glance. The character can be picked up, moved, and repositioned using natural hand gestures, such as pinch and grab, enhancing user engagement and interactivity.
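
The three humidity bands and their behaviors amount to a small threshold classifier. The sketch below shows one way to express it. The cut-off values are hypothetical, since the source only names the bands "high", "moderate", and "low", and `Behavior` and `behavior_for` are illustrative names rather than the project's own.

```python
from enum import Enum


class Behavior(Enum):
    JUMP = "jumps energetically"
    RUN_AND_HOP = "runs and hops lightly"
    TANTRUM = "lies down with tantrum-like gestures"


# Hypothetical band boundaries in percent soil humidity.
LOW_BELOW_PCT = 30.0
HIGH_FROM_PCT = 60.0


def behavior_for(humidity_pct):
    """Pick the character behavior for a soil humidity reading."""
    if humidity_pct < LOW_BELOW_PCT:
        return Behavior.TANTRUM      # low humidity: signal distress
    if humidity_pct >= HIGH_FROM_PCT:
        return Behavior.JUMP         # high humidity: energetic
    return Behavior.RUN_AND_HOP      # moderate humidity
```

Keeping the thresholds as named constants makes it straightforward to tune them per plant species without touching the selection logic.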

BotaniMate transforms sensor data into emotionally resonant “memory moments.” Users can explore historical data through a mailbox-style UI panel: poking its left and right arrow controls steps through past days so they can revisit previous states of their plant’s environment.
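
Day-by-day navigation of this kind can be modeled as a cursor over readings grouped by calendar date. The following is a minimal sketch under stated assumptions: readings arrive as `(timestamp, temperature, humidity)` tuples, each day is summarized by its mean values, and the class name `DayBrowser` and its methods are invented for illustration. The source does not say how a past day is summarized.

```python
from collections import defaultdict
from datetime import datetime


class DayBrowser:
    """Cursor over per-day summaries, driven by left/right arrow pokes."""

    def __init__(self, readings):
        # readings: iterable of (datetime, temperature_c, humidity_pct)
        by_day = defaultdict(list)
        for timestamp, temp_c, humidity_pct in readings:
            by_day[timestamp.date()].append((temp_c, humidity_pct))
        if not by_day:
            raise ValueError("at least one reading is required")
        self._by_day = by_day
        self._days = sorted(by_day)
        self._index = len(self._days) - 1  # start on the most recent day

    def current(self):
        """Return (date, mean temperature, mean humidity) for the shown day."""
        day = self._days[self._index]
        rows = self._by_day[day]
        mean_temp = sum(t for t, _ in rows) / len(rows)
        mean_hum = sum(h for _, h in rows) / len(rows)
        return day, mean_temp, mean_hum

    def previous(self):
        """Left arrow: step one day back, stopping at the oldest day."""
        self._index = max(self._index - 1, 0)
        return self.current()

    def next(self):
        """Right arrow: step one day forward, stopping at the newest day."""
        self._index = min(self._index + 1, len(self._days) - 1)
        return self.current()
```

Clamping at both ends means repeated pokes past the oldest or newest day simply keep showing that day instead of wrapping around.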

The IoT sensor system is built using a NodeMCU board with a Wi-Fi module, programmed via the Arduino IDE. A soil humidity sensor and a temperature sensor continuously track environmental conditions, with data automatically synchronized every five minutes to Google Sheets for cloud storage. The latest sensor data is then retrieved in Unity and rendered as an immersive MR experience on the Meta Quest 3. Meta Quest 3’s Depth API enables realistic occlusion, allowing virtual elements to appear naturally within the physical environment, for example behind furniture or the user’s hand. The system also supports natural, controller-free interaction through Meta Quest’s hand tracking and the Hand Grab Interactor in Unity, enabling users to intuitively pick up, move, and reposition the virtual character. The virtual terrarium environment is modeled in Blender, while the character model and animations are created in Maya.
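
On the consuming side, the five-minute sync implies that the client reads tabular rows and works from the newest one. The sketch below illustrates that step in Python rather than in the project's Unity code. It assumes the sheet is available as CSV text with the columns `timestamp`, `temperature_c`, and `soil_humidity_pct` and ISO 8601 timestamps. The column names, the helper functions, and the staleness rule are all hypothetical, and how the text is fetched from Google Sheets is left out.

```python
import csv
import io
from datetime import datetime, timedelta

SYNC_INTERVAL = timedelta(minutes=5)  # sync period stated in the text


def parse_readings(csv_text):
    """Parse CSV text into (timestamp, temperature_c, humidity_pct) tuples, oldest first."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        rows.append((
            datetime.fromisoformat(row["timestamp"]),
            float(row["temperature_c"]),
            float(row["soil_humidity_pct"]),
        ))
    return sorted(rows)


def latest_reading(rows):
    """Return the newest reading, or None when the sheet is empty."""
    return rows[-1] if rows else None


def is_stale(reading, now, missed_syncs=2):
    """Assumed rule: treat data as stale after more than `missed_syncs` sync periods."""
    return now - reading[0] > SYNC_INTERVAL * missed_syncs
```

A usage example with made-up values:

```python
sample = """timestamp,temperature_c,soil_humidity_pct
2025-01-01T10:05:00,21.5,42.0
2025-01-01T10:00:00,21.0,43.0
"""
newest = latest_reading(parse_readings(sample))
print(newest[1], newest[2])
```

A staleness check like this lets the widget distinguish "the plant is dry" from "the sensor has stopped reporting", which would otherwise look identical in the visualization.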

The authors wish to thank Chaewon Shin for her contribution to the development of the initial prototype. This work was supported by a Korea Institute for Advancement of Technology (KIAT) grant funded by the Korea Government (MOTIE) (RS-2025-02304167, HRD Program for Industrial Innovation).